Autopsy of an Indie MMORPG - Part 1

by Matt, posted on Thursday 18 June 2015.

When an indie developer gets a previous project down from the shelf, dusts it off, and inspects what went well and what didn't, the write-up is often titled a 'post-mortem.' Despite having written these kinds of articles before, I'm just not keen on the term myself; the connotation of something lying lifeless in a mortuary seems a tad too negative for me. But for some projects, the vocabulary just... fits.

I never set out to write an MMORPG. Indeed, the game that was eventually named Scarlet Sword still lives, to this day, in a folder on my hard drive entitled "SummerProject," even though it ended up taking about eighteen months. That's one thing English people just can't be mistaken about: summers here don't quite last that long. No one thinks it's a good idea to write an MMORPG. As you're reading this, somewhere in the world, a 14-year-old is typing a post on some hobbyist forum, essentially asking for instructions on how to make an MMORPG, and within a few hours will have received the standard replies: the snarky cynic joking about a big "make game" button, the well-meaning developer linking to a Hello World tutorial for C++, and so on. And as someone who has made games, even with some commercial success, I'd never set out to start something so unrealistic.

This is my experience of accidentally making an MMORPG.

Oh, by the way!

Matt's latest game is called The Cat Machine, and is available to buy right now!

It's a game of logic and cats, I think you'll like it.

A common practice for Computer Science bachelor's programmes is to assign third-year students a Final Year Project: a chance to create something, anything, that interests them, with whatever programming languages and tools they fancy. For anyone who just likes making stuff, this is going to be their favourite bit of the whole course. It struck me as tremendously odd that some students chose to write a bog-standard blog engine. There are a dozen blog-engine tutorials for every language a single Google search away, and we'd only dipped into fascinating subjects like AI, natural language processing, robotics, machine learning... I remember thinking, "Is that the most exciting thing you can think of doing?"

For me, what had got my interest was the thought of exploring the architecture of a complex backend for a networked video game, running at non-trivial scale.

Creating commercial browser games was what had originally piqued my interest in this area. In my first year at university I wrote a Flash game for a small web publisher that boiled down to a simple noughts-and-crosses/tic-tac-toe type multiplayer game, with a simple match-making system, over a crude TCP protocol I had thrown together. It somehow worked. But in my head it was a thousand miles away from the games I wanted to play, let alone the games I wanted to create. Having spoken to creators of real-time multiplayer games, like FPSs and strategy games, I knew that a big difficulty is running the physics simulation, or the game rules, for lots of players. Supporting 64 clients in a complex world is a challenge, even if it's one we now take for granted when games like Battlefield and Tribes have existed for so long.

The goal for my final year project was to create a tech-demo (yep, let's see how that goes) for a 2D (that's simpler than 3D! Right?) game that supports hundreds of simultaneous connections.

However! I wasn't setting out to create any ol' MORPG (first M pending at this stage) clone. No, this wasn't just being looked at from a purely technical point of view; there were game design ideals as well. One of my favourite games of my childhood was the Game Boy version of Zelda: Link's Awakening, a classic game that focused on very simple mechanics. Explaining the plan for the game to other people, I found myself describing it as "like one of those classic 2D Zelda games, except online and multiplayer." That's a game I wanted to play! It's not an original thought at this point, but MMORPGs still have a major gameplay problem: click on an enemy until it's dead, repeat until the level goes up, then repeat that. The grind just isn't fun; it doesn't live up to what playing with friends ought to be. So that was the exciting part: to take the stripped-down, skill-focused mechanics of an old-school Zelda game and build a game that faithfully recreated that experience, yet networked, with hundreds of other players in the same world.

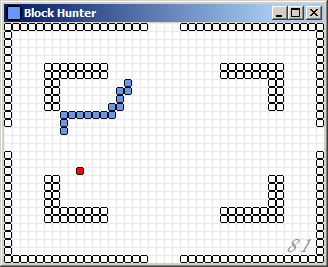

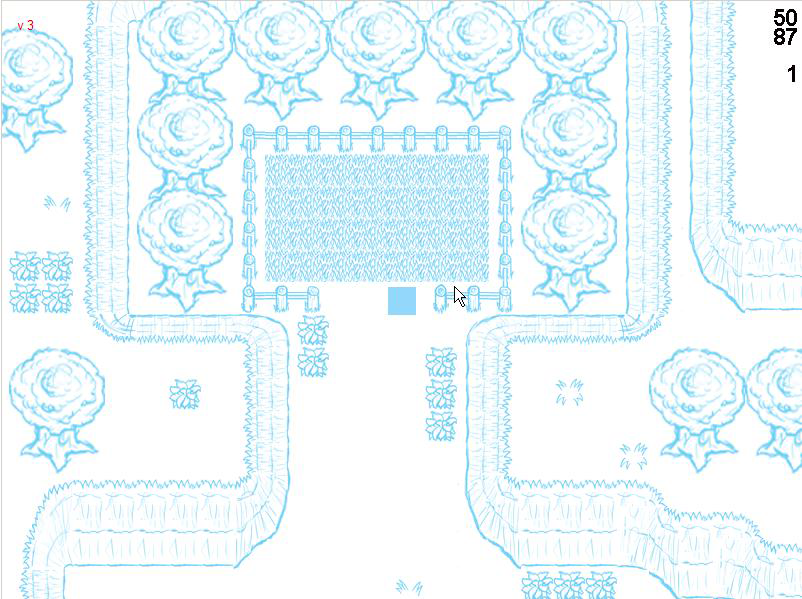

At the end of my second year, I took a weekend and worked on a prototype, just to see whether this whole plan was going to be feasible. Are you ready? Prepare yourselves:

Beautiful. You'd open that Flash application up on two laptops and move around with the arrow keys. You'd see those blue squares move about on both screens. Riveting. But this is how game prototyping ought to be: you want to express the core part of the game design, whether you're solving an architectural/technological problem or solving for fun. In this particular instance, I was building off some core Zelda mechanics, so what I was interested in was simply: can I pull off a networked real-time web game? Can I come close to delivering on that?

Two blue squares controlled via the arrow keys is not visually impressive, but under the hood there's a fair amount going on. I wrote a Python server built on top of Twisted Matrix, a rather enormous networking library. This was connected to a Flash application generated by Multimedia Fusion 2 (MMF2), a drag-and-drop type tool. Python and MMF2 are both perfect for prototyping, and I'd worked freelance in both of these beforehand, with pleasing results.
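The original Twisted server isn't reproduced here. As a rough stand-in, here's a minimal sketch of the same idea using Python's standard-library `asyncio` instead of Twisted: a server that relays each client's newline-terminated position updates to every other connected client, which is roughly what a two-squares prototype needs. The `RelayServer` class, the `POS x y` message format and port 8000 are assumptions for illustration, not the project's actual protocol.

```python
import asyncio


class RelayServer:
    """Relays each client's position updates to every other connected client."""

    def __init__(self) -> None:
        self.writers: set[asyncio.StreamWriter] = set()

    async def handle_client(self, reader: asyncio.StreamReader,
                            writer: asyncio.StreamWriter) -> None:
        self.writers.add(writer)
        try:
            while True:
                line = await reader.readline()  # hypothetical format, e.g. b"POS 3 4\n"
                if not line:  # empty bytes: the client disconnected
                    break
                # Iterate over a copy: clients may join or leave while we await drain().
                for other in list(self.writers):
                    if other is writer:
                        continue
                    try:
                        other.write(line)
                        await other.drain()
                    except ConnectionError:
                        self.writers.discard(other)  # drop clients that vanished mid-send
        finally:
            self.writers.discard(writer)
            writer.close()


async def main() -> None:
    """Serve forever on a hypothetical local port."""
    relay = RelayServer()
    server = await asyncio.start_server(relay.handle_client, "127.0.0.1", 8000)
    async with server:
        await server.serve_forever()
```

Running `asyncio.run(main())` starts the server; each connected client then sees every other client's `POS` lines and can move its copy of that player's square accordingly. Note that the server here is just a dumb relay with no authority over positions at all.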

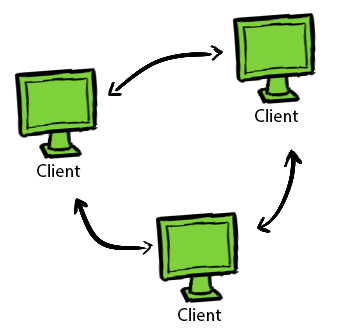

Let's back up a moment, and go through some basic networking theory. You have a bunch of processes, running on different machines, and you want them to talk to one another. The first fundamental choice to make is whether these communications occur peer-to-peer, or via a centralised server.

The best thing about peer-to-peer is the absence of the server. If you're playing Hearthstone and the servers go down, you've got to wait till Blizzard turns them all back on, but with P2P you might spread the addresses of the various players across a distributed hashtable that's shared by everyone, each player taking on part of the role normally played by the server. The problem with equally trusting every player in the network is that every player is not equally trustworthy. You've probably seen some neat MMORPG web-game concepts in the last few years built on WebRTC. They're incredibly impressive, but the issue with every client taking authority over its own state is that it's not at all difficult to open up LocalStorage and award yourself the most powerful sword in the game, set your level to 99, or add a few zeroes on the end of your movement speed. But these tend to be tech demos, not intended to be played seriously.
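To make the shared-hashtable idea a little more concrete, here's a minimal consistent-hashing sketch of how peers could agree on which of them stores a given player's address. The `HashRing` class, the choice of SHA-1 and the peer names are assumptions for illustration; real distributed hash tables such as Kademlia add routing, replication and churn handling that this omits entirely.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string onto a large integer ring using SHA-1."""
    return int(hashlib.sha1(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Each peer owns the arc of the ring that ends at its own hash position."""

    def __init__(self, peers: list[str]) -> None:
        self._ring = sorted((_hash(p), p) for p in peers)

    def owner(self, key: str) -> str:
        """Return the peer responsible for storing `key`."""
        positions = [pos for pos, _ in self._ring]
        # First peer clockwise from the key's position, wrapping around to the start.
        i = bisect.bisect_left(positions, _hash(key)) % len(self._ring)
        return self._ring[i][1]

    def remove(self, peer: str) -> None:
        """Drop a departed peer; only the keys it owned move, to its successor."""
        self._ring = [(pos, p) for pos, p in self._ring if p != peer]
```

Every peer can compute `ring.owner("player:alice")` locally and get the same answer, so no central server is needed to find Alice. Equally, nothing stops the owning peer from lying about what it stores, which is exactly the trust problem described above.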

The first game I ever completed was a Snake clone named Blockhunter, when I was fifteen. I wrote a horrible bit of PHP, plugging into a MySQL table, to record high scores sent from players, and when I uploaded it to a few freeware sites, it took less than a couple of hours for someone, somewhere to open up a hex editor, find where the score was stored, and change that value to the maximum that MySQL's INTEGER UNSIGNED field type can take (4,294,967,295). Or perhaps they packet-sniffed the POST request and sent a duplicate with the value altered in the payload. Either way, more than anything I was amazed that someone took the trouble to cheat at my little Snake clone. And if they'll cheat at something so small, a game where they play with others, with persistence, is certainly fair game for the same treatment.

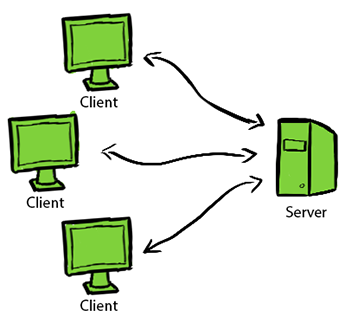

Departing from a peer-to-peer approach, and from authoritative clients, we return to the idea of a centralised server. This immediately sorts out many of our issues with cheating, since all the important game-rule questions, such as "who shot who?", "how much damage was dealt?" and "can a player walk through a wall?", are taken out of the clients' hands and given to an impartial computer, the server, which players don't have access to. In MMO games, clients then tend to become 'dumb' to a certain extent: merely displaying the current game state as dictated by the server, taking input from the user, and requesting changes to the game state from the server, to which the server can always say 'no' if it's an illegal state change.
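As a minimal sketch of the server saying 'no', assuming a simple grid-based world (the real game's movement and map format aren't described here), the server holds the authoritative position and only applies a requested move when it's legal. `LEVEL`, `Player` and `handle_move_request` are hypothetical names.

```python
from dataclasses import dataclass

# Hypothetical level: '#' is a wall, '.' is open floor. Rows are y, columns are x.
LEVEL = [
    "#####",
    "#...#",
    "#.#.#",
    "#...#",
    "#####",
]

DIRECTIONS = {"north": (0, -1), "south": (0, 1), "west": (-1, 0), "east": (1, 0)}


@dataclass
class Player:
    x: int
    y: int


def handle_move_request(player: Player, direction: str) -> bool:
    """Apply a client's move request only if it's legal; report whether it was accepted."""
    if direction not in DIRECTIONS:
        return False  # malformed request: refuse rather than guess
    dx, dy = DIRECTIONS[direction]
    nx, ny = player.x + dx, player.y + dy
    if not (0 <= ny < len(LEVEL) and 0 <= nx < len(LEVEL[ny])):
        return False  # off the edge of the map
    if LEVEL[ny][nx] == "#":
        return False  # the server says 'no': nobody walks through walls
    player.x, player.y = nx, ny  # only the server writes the authoritative position
    return True
```

The client would then draw whatever position the server broadcasts back, rather than trusting its own idea of where the player is.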

Sounds great, then! We're following the golden rule of 'never trust the client!' But the side effect is that now we have to write the server, carving the game logic away from the application the player has. This, by the way, is why it's so difficult to make an existing single-player game suddenly support many more players. There's no 'multiplayer' switch you can flip; it doesn't bolt onto the side easily. It's the sort of thing that has to be kept in mind from the beginning to avoid significant rearchitecting at a later point. The complexity of server development comes from handling all of its tasks with good performance: simulating the game's physics and logic, responding to network requests, reading and writing from the database, and so on. And for MMORPG-type games the difficulty is exacerbated, because all these aspects of the server are being performed not just for sixteen or thirty-two players, but for hundreds of them.

A second slice of trickiness comes when you want to spread multiple instances of the server around the world. And you probably do want to do that for a real-time Zelda-like game, because these games, more than most, are timing-based. The player dodges the arrow at just the right moment, hits the attack button at the last second. If network latency gets above 30ms, the player starts adjusting their button presses to that delay and noticing the sluggishness, and when that delay gets above 100ms, the game starts becoming unplayable. So in order to let people in America play under the same pleasant conditions as those in Europe, yes, you'll probably want to run identical instances of the servers on different continents. And because you want to avoid conflicts of Truth when it comes to the database, and to stop players logging into more than one server at the same time, a master-server application is required for the instance servers to report to.
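A minimal sketch of the master server's bookkeeping for one of those jobs, stopping a player logging into more than one instance server at once, might look like this. `MasterServer` and its methods are hypothetical; in a real deployment the instance servers would talk to it over the network, and it would also need heartbeats or timeouts to release accounts held by an instance that crashed.

```python
class MasterServer:
    """Tracks which instance server, if any, each account is currently logged into."""

    def __init__(self) -> None:
        self._sessions: dict[str, str] = {}  # account id -> instance server name

    def request_login(self, account: str, instance: str) -> bool:
        """Called by an instance server; refuse if the account is live elsewhere."""
        current = self._sessions.get(account)
        if current is not None and current != instance:
            return False  # already playing on another instance
        self._sessions[account] = instance
        return True

    def report_logout(self, account: str, instance: str) -> None:
        """Release the account, but only if this instance actually holds it."""
        if self._sessions.get(account) == instance:
            del self._sessions[account]
```

Keeping this single source of truth on one machine is what lets the instance servers stay otherwise identical and independent of each other.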

So, a centralised server may solve all our nasty cheating problems, but in the same breath we've radically increased the amount of engineering we've got to do, and we've now got the responsibility of keeping some server machines up and running.

For completeness's sake, I should note that the client-has-authority and server-has-authority approaches are not mutually exclusive. One popular thing to do in fast-paced games is to let clients make some decisions about the game state and have the server 'check' a portion of them. So the client might be allowed to dictate how it moves, with the server keeping an eye on the speed the player appears to be moving at, and on whether any collision objects are being ignored. The big advantage of doing something like this is that it takes a processing-expensive part of the physics simulation and runs it on the player's (probably quite powerful) machine, and if that player starts cheating, the server will (in theory) catch the illegal state changes and boot them from the game. This tends not to be employed as much by MMORPGs, however. If a cheating player spoils a match in an FPS, ten minutes will pass and the game will be restarted, but an MMORPG has too much persistence involved to hand important decisions like damage calculation or hit collision to the player.
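Here's a minimal sketch of that kind of server-side check on a client-reported position, assuming positions measured in tiles and a known maximum walking speed. `MAX_SPEED`, `TOLERANCE` and `validate_client_move` are hypothetical, and the values are placeholders rather than numbers from the game.

```python
import math

MAX_SPEED = 5.0   # hypothetical: tiles per second a player can legitimately walk
TOLERANCE = 1.2   # hypothetical: 20% slack for network jitter and timer drift


def validate_client_move(old_pos, new_pos, dt, walls):
    """Accept a client-reported move only if it's plausible.

    old_pos/new_pos are (x, y) in tiles, dt is seconds since the last update,
    and walls is a set of (col, row) tiles a player can't stand in.
    """
    if dt <= 0:
        return False  # reject updates that claim no time has elapsed
    if math.dist(old_pos, new_pos) > MAX_SPEED * dt * TOLERANCE:
        return False  # too fast: a speed hack, or a teleport
    tile = (math.floor(new_pos[0]), math.floor(new_pos[1]))
    return tile not in walls  # standing inside a wall means collision was ignored
```

This only tests the destination tile; a fast-moving client could still tunnel through a thin wall between updates, so a stricter server would also check the tiles along the path.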

But back to my Zelda-inspired multiplayer game. One reason I felt so comfortable picking Python was that I knew the big space MMO, EVE Online, was largely Python-powered on the backend. They use Stackless Python, a Python variant that adds some extra concurrency sauce. If they can simulate a majorly complex universe with massive interstellar conflicts involving thousands of players, my little project ought to be fine. I had also had experience in the previous few years with Cython, another Python flavour, this time adding statically typed syntax and compiling parts of the code to C, which was very pleasant (if a bit disorienting, seeing static type declarations in Python code). Personally, though, I hoped to avoid Stackless Python, Cython and IronPython, because PyPy, a Just-In-Time compiler for Python, was becoming quite mature and was compatible with the Twisted Matrix networking library. Just-In-Time compilation involves running the code, looking for the routines that run most often, and compiling them at run time. Essentially, it's 'free' optimisation, with no changes to the Python code necessary. (Okay, not really free, because there's a warm-up time involved, but for long-running processes, it's ideal.)

Now, premature optimisation is a grave sin in software engineering. But to begin with, this was a university project, and there's nothing university project markers like more than graphs and tables, so for their benefit more than mine, I wrote a bit of code in these various flavours to record (roughly) their relative computation speeds. The code I chose was an implementation of the A* pathfinding algorithm, suitable because it's used in games so frequently and it's sufficiently expensive computationally, with all the node expansion and bookkeeping involved. I also wrote an implementation in Java as a baseline, because universities love Java, for some reason.

| Execution method | Average over 100,000 executions (ms) |
|---|---|
| Python 2.7 | 1.80706 |
| Cython 0.17 | 0.60112 |
| PyPy 2.0 | 0.71504 |
| Java 7 | 0.29150 |

It should be noted that this table is a bit silly: the Java code was certainly not like-for-like with the Python code, and really shouldn't be compared. And it's sillier still now, because this was 2012, PyPy 2.0 had only just been released as a beta, and they've all had a number of new versions since then.

But what surprised me was PyPy's excellent performance, narrowly beaten by Cython. Had the A* routine only been run for a few iterations, PyPy would have been much closer in performance to standard Python, but give it some tightly nested code and a few minutes to mark it all as 'hot,' and some rather heavy optimisations emerge. Good news for server code.
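The original benchmark code isn't shown, so as a rough sketch: a conventional A* on a 4-connected grid using a binary heap (`heapq`), plus a `timeit` harness that averages over many runs in the spirit of the table above. The grid, the `astar` and `benchmark` names and the run count are assumptions; because it uses only the standard library, the same file can be run under both CPython and a Python 3 PyPy to compare timings.

```python
import heapq
import timeit

# Hypothetical test map: 0 is open floor, 1 is a wall. Cells are (row, col).
GRID = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]


def astar(grid, start, goal):
    """Return the number of steps on the shortest 4-connected path, or None if unreachable."""
    def h(cell):
        # Manhattan distance never overestimates when each step costs 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_g = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            return g
        if g > best_g[cell]:
            continue  # stale entry: a shorter route to this cell was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None


def benchmark(n=10_000):
    """Average wall-clock time per call, in milliseconds."""
    seconds = timeit.timeit(lambda: astar(GRID, (0, 0), (4, 4)), number=n)
    return seconds / n * 1000


if __name__ == "__main__":
    print(f"average: {benchmark():.5f} ms")
```

The warm-up caveat applies here too: with a small `n`, a JIT spends proportionally more of the run compiling, so its average looks worse than it would in a long-running server.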

Okay, now the University professors are happy, sat in a corner with a table of numbers, we can return to the prototype.

Over the course of the summer, I expanded the prototype, confidently working in Python still, and still utilising MMF2 because it was just easy, and it exported to Flash so well. Flash was still ubiquitous in 2012, despite five years of identical "Flash Is Dead" blog posts running before that. Happily that's a bit more true now, even if web-games that the masses play haven't got that memo.

By the start of my third year, when most students tend to start thinking about their projects, here's how the game looked:

The blue squares still remain, but much has changed! The most visually striking difference is that I'm streaming in both map data and a tilemap from a webserver, with nginx serving the static files. Divorcing the map data from the game client is a lovely thing to do, because updates to the world can take place without forcing players to re-download the entire Flash app. The graphics are all very sketchy, though I'd later use them as roughs for the final line art. The blue-lines-on-white aesthetic, although temporary, actually gathered a fair amount of positive feedback, a reminder that distinctive graphical choices are a great way to get some attention.

A larger change, as the server was expanded, was server-side collision. In the prototype, the blue squares wandered around in a white void where there was nothing to collide with, but as soon as you start dealing with obstacles, the issues we've previously discussed concerning authority come into play. "Can I move here?" It's a simple enough question, but anyone who played Counter-Strike back in the day will know it's crucially important. Now, if I were to implement the collision routine today, I would jump straight into writing a quadtree implementation without a second thought and be done with it, but I was less familiar with the standard ways of doing things then than I am now. At the start I was just colliding with static objects, so on the server I was checking the player against the tiles immediately around them, which at that early stage was quite acceptable.
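A minimal sketch of that 'tiles immediately around the player' approach, assuming integer pixel coordinates, an axis-aligned bounding box for the player and a set of solid tile coordinates, only inspects the handful of tiles the box actually overlaps. `TILE_SIZE` and `collides` are hypothetical.

```python
TILE_SIZE = 16  # hypothetical: pixels per tile


def collides(x, y, w, h, solid):
    """True if a w-by-h box at pixel (x, y) overlaps any solid tile.

    solid is a set of (col, row) tile coordinates. The box covers pixels
    x..x+w-1 and y..y+h-1, so merely touching a tile's edge isn't a hit.
    """
    first_col, last_col = x // TILE_SIZE, (x + w - 1) // TILE_SIZE
    first_row, last_row = y // TILE_SIZE, (y + h - 1) // TILE_SIZE
    # Only the few tiles under the box are examined, never the whole map.
    for row in range(first_row, last_row + 1):
        for col in range(first_col, last_col + 1):
            if (col, row) in solid:
                return True
    return False
```

Moving objects can't be looked up by tile position like this, which is where a broad-phase structure such as the quadtree mentioned above (or a uniform spatial hash) usually comes in.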

Once the collision check was working on the server, the same check could be performed on the client side. There's no need to send the server a "can I move to the right?" request if the player is directly to the left of a wall, so by implementing collision checking on the client as well as the server, we save a few packets here and there.
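The sketch below combines that client-side check with moving immediately, using a common pattern often called client-side prediction with server reconciliation; it isn't necessarily how Scarlet Sword did it. The client skips the request entirely when it can see a wall; otherwise it sends a numbered move, applies it at once, and later adopts the server's authoritative position and replays any moves the server hasn't processed yet. `PredictingClient`, the message format and the `send` callback are hypothetical.

```python
class PredictingClient:
    """Client-side prediction with server reconciliation on a tile grid."""

    def __init__(self, x, y, walls, send):
        self.x, self.y = x, y
        self.walls = walls    # set of blocked (x, y) tiles, the same data the server uses
        self.send = send      # hypothetical callback that transmits a message to the server
        self.seq = 0          # number of the most recent move sent
        self.pending = []     # (seq, dx, dy) moves sent but not yet processed by the server

    def try_move(self, dx, dy):
        """Return False without touching the network if a wall is in the way."""
        nx, ny = self.x + dx, self.y + dy
        if (nx, ny) in self.walls:
            return False  # no point asking the server: save the packet
        self.seq += 1
        self.send({"seq": self.seq, "dx": dx, "dy": dy})
        self.pending.append((self.seq, dx, dy))
        self.x, self.y = nx, ny  # predict: start moving before the server replies
        return True

    def on_server_state(self, last_seq, x, y):
        """Adopt the server's position, then replay moves it hasn't processed yet."""
        self.pending = [m for m in self.pending if m[0] > last_seq]
        self.x, self.y = x, y
        for _, dx, dy in self.pending:
            self.x += dx
            self.y += dy
```

If the server rejects a move, its reply simply carries the old position, and the client snaps back to it; that snap is the visible cost of predicting.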

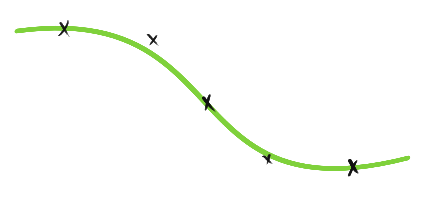

But that's not the only benefit we get from collision checking on the client. If the client has an awareness of how game objects interact with one another, we can smooth out the movements of other players sent by the server, and predict our own movements ahead of the server's reply. If I send an "I'd like to move north" request to the server, I don't have to wait till the server comes back to me with an affirmative: if I know there's no obstacle in front of me, I can start moving at once, and reduce the latency between the button press and the movement beginning. Interpolating the position of objects sent by the server is an interesting trade-off. The smoother the function you apply to interpolate a set of coordinates, the smoother the movement appears, but if the object makes unexpected movements (i.e., movements not along the predicted curve) there can be an unpleasant 'elastic-banding' effect that anyone who has played online games over a shoddy connection is all too familiar with. Eventually, much later on in development, I worked out by trial and error that different settings just suit different objects better. Linear interpolation is perfect for projectiles that follow a standard straight line; cubic interpolation suits players; and bats, which moved in circular arcs around the player, looked best with cubic interpolation and a heavy smoothing value.
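As a sketch of the two families of curve mentioned above: linear interpolation between two server snapshots, and a cubic cardinal spline through four consecutive snapshots, where `tension=0` gives the common Catmull-Rom spline. The exact cubic the game used, and what its 'smoothing value' controlled, aren't stated, so treating tension as that knob is an assumption, as are the function names.

```python
def lerp(a, b, s):
    """Linear interpolation between 2D points a and b, for s in [0, 1]."""
    return (a[0] + (b[0] - a[0]) * s, a[1] + (b[1] - a[1]) * s)


def cardinal(p0, p1, p2, p3, s, tension=0.0):
    """Cubic cardinal-spline interpolation between p1 and p2, for s in [0, 1].

    p0 and p3 are the neighbouring snapshots that shape the curve's tangents;
    tension=0 is Catmull-Rom, and tension=1 flattens the tangents to zero.
    """
    s2, s3 = s * s, s * s * s
    # Cubic Hermite basis functions.
    h00 = 2 * s3 - 3 * s2 + 1
    h10 = s3 - 2 * s2 + s
    h01 = -2 * s3 + 3 * s2
    h11 = s3 - s2
    k = (1 - tension) / 2  # scales the tangents (p2 - p0) and (p3 - p1)
    return tuple(
        h00 * b + h10 * k * (c - a) + h01 * c + h11 * k * (d - b)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )
```

In use, a client would typically render other objects slightly in the past, between snapshots it has already received, with `s` running from 0 to 1 over one snapshot interval; the cubic's dependence on `p3` is also why a sudden change of direction shows up as overshoot.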

Lots of the fundamentals shared by most networked games were getting implemented at this point, and it was playing nicely. This was the stage of the project when I should have carefully sat down and decided: what's the scope of this project? Instead, it just tumbled along as I began work on fun-looking features.

In the next part of this blog, we'll continue the progress as graphics are added, tools are created, and... bugs. Lots of bugs were encountered.

Written by Matt.

Who is also on Twitter.
